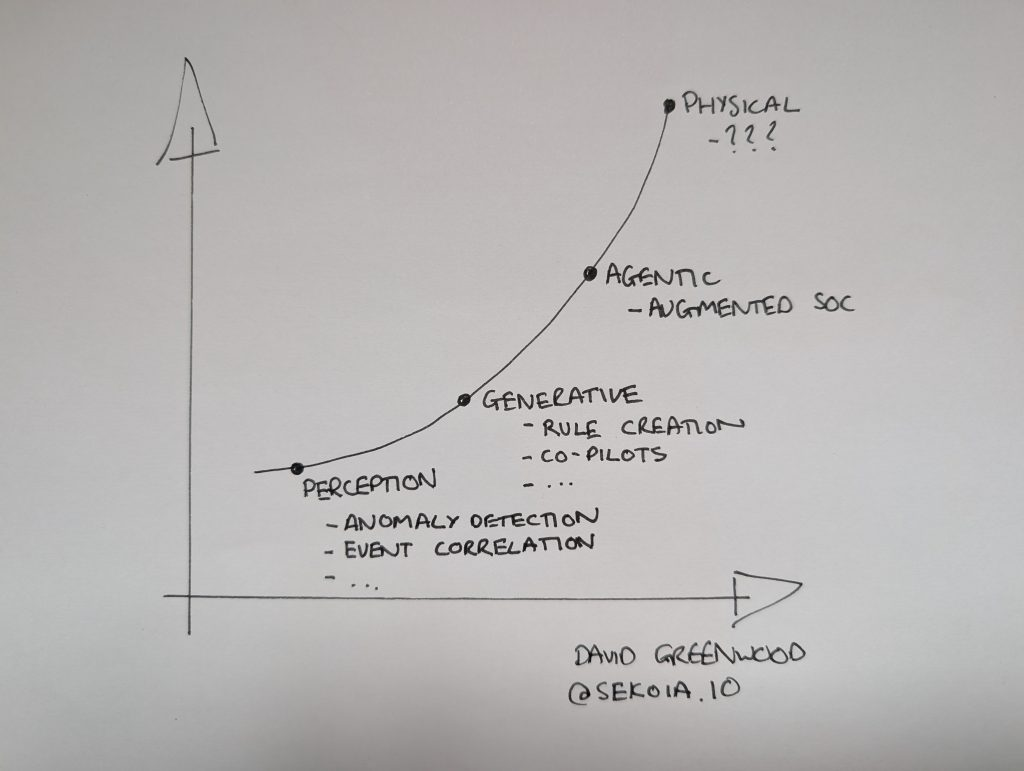

- Perception AI helps us to understand text, speech, and images

- Generative AI helps us to generate text, speech, pictures, and videos

- Agentic AI can perceive, reason, plan, and act

- Physical AI can interact with the physical world

The Sekoia platform has always been at the bleeding edge of each AI wave, empowering SOC teams to be more efficient.

Often, as time passes, we forget just how quickly technology changes both our daily and working lives. As we enter the Agentic AI era, I wanted to take a reflective look at how the various waves of AI have changed how SOC teams work.

Perception AI

By extracting meaning from raw data, Perception AI allows computers to interpret and label the world around us.

It’s very likely you use Perception AI in your personal life every day, from voice assistants like Apple’s Siri and Amazon’s Alexa to image recognition in Apple Photos or Google Images.

Inside the AI SOC, Perception AI plays a significant role by improving how security threats are detected, analysed, and responded to.

Anomaly Detection

In the fledgling days of SIEM, detection rules were very simple, for example, looking for error codes or warning messages.

Time to detection was, and still is, one of the key metrics many SOC teams measure. Anomaly detection was introduced in the first generation of AI SOCs and delivered the first significant leap in the ability to surface security incidents in data lakes.

Using machine learning models, a baseline for normal network activity can be established. Perception AI can then identify deviations from this baseline, flagging potential security threats or unusual activities for further investigation.
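To make the baseline idea concrete, here is a minimal sketch that uses a simple statistical baseline (mean and standard deviation with a z-score threshold) rather than a full machine learning model. It is not how the Sekoia platform implements anomaly detection; the daily counts of service installations and the threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev


def build_baseline(history: list[int]) -> tuple[float, float]:
    """Return the mean and sample standard deviation of historical counts
    (e.g. hypothetical daily service installations on one host)."""
    return mean(history), stdev(history)


def is_anomalous(count: int, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a count whose z-score is above the threshold.

    One-sided on purpose: only spikes above normal activity are flagged.
    """
    mu, sigma = baseline
    if sigma == 0:
        # Perfectly flat history: any value other than the mean is unusual.
        return count != mu
    return (count - mu) / sigma > threshold


if __name__ == "__main__":
    baseline = build_baseline([2, 3, 1, 2, 4, 3, 2])  # a quiet week (made-up data)
    print(is_anomalous(3, baseline))   # within normal variation
    print(is_anomalous(15, baseline))  # sudden burst of new services
```

A real deployment would learn baselines per host and per time of day, and would tune the threshold to balance missed detections against false positives.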

To illustrate with a real example, the following graph, taken from the Sekoia platform, shows the output of an anomaly detection rule that monitors the volume of services installed on a system. A spike can indicate malicious activity, such as malware, or the installation of otherwise benign tools used to help exfiltrate data.

You can read more about Anomaly Detection rules in the Sekoia platform here.

Event Correlation

Detecting anomalous activity is useful. Understanding what happened in relation to the anomalous activity makes triaging much more effective.

To develop the previous example further, this could mean considering other events related to the service installations, for example, a user’s privileges being escalated.

Event correlation allows SOC teams to observe the context of an event or, put another way, the big picture of an incident. This alone has significantly reduced the volume of benign alerts that once overwhelmed SOC teams.
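The correlation described above can be sketched as pairing related events on the same host within a time window. This is a minimal, hypothetical illustration: the `Event` structure, the event labels, and the 30-minute window are assumptions, not the Sekoia platform’s data model or correlation engine.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    host: str
    kind: str  # hypothetical labels, e.g. "privilege_escalation", "service_install"
    timestamp: datetime


def correlate(events: list[Event], first: str, second: str,
              window: timedelta = timedelta(minutes=30)) -> list[tuple[Event, Event]]:
    """Pair every `first` event with each `second` event on the same host
    that happens no later than `window` after it."""
    pairs = []
    for a in events:
        if a.kind != first:
            continue
        for b in events:
            if (b.kind == second and b.host == a.host
                    and timedelta(0) <= b.timestamp - a.timestamp <= window):
                pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    events = [
        Event("srv-01", "privilege_escalation", t0),
        Event("srv-01", "service_install", t0 + timedelta(minutes=5)),
        Event("srv-02", "service_install", t0 + timedelta(minutes=5)),  # other host
        Event("srv-01", "service_install", t0 + timedelta(hours=2)),     # too late
    ]
    for esc, install in correlate(events, "privilege_escalation", "service_install"):
        print(esc.host, install.timestamp - esc.timestamp)
```

The nested loop keeps the sketch readable; at the terabyte scale mentioned below, events would instead be indexed by host and time so that each lookup only touches nearby events.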

Perception AI has proved a game changer for the SOC in this regard because of the scale at which it can operate. It can link seemingly disparate events across vast and complex IT environments, where terabytes of generated data are now the norm.

One really cool example I’ve seen employed here is using Perception AI to track an actor inside a network and respond accordingly.

You can read more about Cases in the Sekoia platform here.

Ultimately, being able to understand what has been done, what is currently underway, and therefore what might happen next, at massive scale, has addressed another key SOC metric: time to resolution.

Generative AI

The Generative AI era was hallmarked by the arrival of ChatGPT in November 2022, followed by a host of other AI tools across image, voice, and video.

When people talk about AI today, it is likely they are referring to Generative AI. Generative AI refers to systems that create new content, such as text, images, and videos.

Generative AI has largely been made possible by advances in computing and the resulting reduction in compute cost. Many public AI models have been trained on billions of web pages, giving them a good level of general knowledge. We’re now entering a phase where more specialised models, in domains like security, are becoming increasingly powerful.

Over the last three or so years, the Generative phase has brought the AI SOC astonishment and disappointment in equal measure. It has taken a while, but the security industry is realising how Generative AI can solve real problems, rather than just power fancy keynote demos.

Detection Rule Generation

I previously talked about anomaly detection in the Perception AI era. The reality was that human knowledge was still required to write the logic of the initial detection rule, for example, a rule to monitor installed services.

What I didn’t mention is that writing detection hypotheses, i.e. deciding what you want to detect, is often the hardest part of the task. It requires theorising about what constitutes a security incident. Even once you have that nailed down, turning it into a fully formed rule with the right structure is tough.

You can read more about the types of detection rules available in the Sekoia platform here.

Generative AI is now aiding detection rule creation and maintenance in the SOC at a scale never seen before. Instead of out-of-date alerts bogging down security tooling, Generative AI can adapt and enhance alerts as threats evolve.

Security Co-pilots

Security vendors have gone one of two ways when it comes to selling the efficiencies AI brings: “Replace your SOC team with AI” or “Give your SOC team a helping hand”.

We’re firmly in the latter camp.

From my experience, the most valuable security tools don’t replace human judgment – they amplify it by removing the friction that prevents that judgment from being applied efficiently.

By providing teams with the right information – intelligence, enrichment, guidance – based on a large corpus of knowledge, Generative AI is transforming how SOC teams work by supporting them from detection through to response.

Chat interfaces are a really good example of this, allowing teams to quickly surface the information they need to make better, more timely decisions.

You can read more about the Sekoia platform’s AI assistant, ROY, here.

Agentic AI

And so we arrive at the Agentic era.

Whilst Generative AI has proven wildly successful at boosting productivity in data-driven analytical tasks, it still fundamentally requires a human to provide guidance and instructions to achieve the desired results, often, frustratingly, after many failed attempts.

Agentic AI changes that.

When we say ‘Agentic’, we mean a phase of AI that utilises ‘agents’. But what are agents?

AI Agents act on behalf of someone, with the ability to take actions and to choose which actions to take. Instead of simply generating an output based on human instruction, AI Agents have the autonomy to decide their own instructions within their defined scope.

For example, an agent given the scope of writing blog posts would come up with a post idea, research the topic, create the content, publish the post and then promote it. All without any human input.

Thus we enter an autonomous world: one where certain tasks are decided and acted upon automatically by artificial intelligence. Such tasks and processes are therefore ‘Agentic’.

In the very near future, we will see a world of augmented SOCs, whereby humans work alongside their AI colleagues.

Each AI agent will have a job specification that clearly defines its scope and level of autonomy. In many ways, a SOC manager’s job will evolve to include nurturing the success of their agents, much as they do for their human employees.

Here are some Agents I believe are perfectly suited for the human-in-the-loop AI SOC:

Threat Hunter Agent

Threat Hunter Agents will be proactive in trying to identify incidents as early as possible.

In addition to creating and maintaining detection and hunting content, the Threat Hunter Agent will continually update its knowledge of the attack surface and external threats to identify new attack vectors to test and monitor.

This agent will not only create alerts but also work with other teams, such as vulnerability management, to help them better prioritise their work.

Alert Triage Agent

Alert Triage Agents will be responsible for alert grouping and triage, for example, enriching alerts with threat intelligence or asset data.

Alert Triage Agents work alongside human Analysts.

After the Alert Triage Agent completes its work, human Analysts have all the relevant information to determine whether the alert requires additional investigation (which the Alert Triage Agent can be instructed to undertake) or whether the incident should be escalated to the response team.
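As a rough illustration of the enrichment step, the sketch below attaches asset context and threat intelligence matches to an alert and adds a simple escalation hint for the human Analyst. Everything here is hypothetical: the in-memory `THREAT_INTEL` and `ASSETS` tables stand in for real intelligence feeds and asset inventories, and the field names are not the Sekoia platform’s alert schema.

```python
from typing import Any

# Hypothetical stand-ins for a threat intelligence feed and an asset inventory.
THREAT_INTEL = {"203.0.113.7": "Known C2 server"}
ASSETS = {"srv-01": {"owner": "finance", "criticality": "high"}}


def enrich_alert(alert: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the alert with asset context and intel matches added."""
    enriched = dict(alert)
    enriched["asset"] = ASSETS.get(alert.get("host"), {"criticality": "unknown"})
    enriched["intel"] = [
        f"{ip}: {THREAT_INTEL[ip]}"
        for ip in alert.get("remote_ips", [])
        if ip in THREAT_INTEL
    ]
    # A hint, not a decision: an intel hit on a high-criticality asset
    # suggests escalation, but the human Analyst makes the call.
    enriched["suggest_escalation"] = (
        bool(enriched["intel"]) and enriched["asset"].get("criticality") == "high"
    )
    return enriched


if __name__ == "__main__":
    alert = {"host": "srv-01", "remote_ips": ["203.0.113.7", "198.51.100.1"]}
    print(enrich_alert(alert))
```

Keeping the output as a suggestion rather than an automatic escalation reflects the human-in-the-loop model described above.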

Response Agent

Response Agents work inside the Incident Management team to determine and handle the execution of response actions.

Working with human incident responders and drawing on historical knowledge of past incidents, the Response Agent will be able to guide or execute the correct actions to remediate a threat.

A Response Agent will also work with operations teams to help them improve their workflows, for example, by advising on missing detections or triage steps that could support their work.

Physical AI

Hopefully by then, work as we know it will be a thing of the past, and I’ll be playing golf with my AI robot friends.